It's good to be plastic - Awesome MLSS Newsletter

5th Edition

We’ve all heard the refrain: LLMs don’t really “think” — they process one token at a time, without any true understanding.

To address this, we built reasoning models. These are trained to first plan a solution strategy, then work through a problem step by step. But even this is a simplification. Human beings — and human brains — don’t operate that way in full either.

Our brains don’t rely on a fixed set of weights waiting for the right input. They adapt constantly, learning as they go. Neurons only operate in binary terms — fire or don’t — but together they build a system capable of continuous learning and reconfiguration.

There’s growing research aimed at making artificial networks more like this: plastic, in the sense that they can adapt and learn in real time.

We’ll explore that next — right after these updates.

Upcoming Summer School Announcements

Make sure to apply to them before the application deadline!

| Title | Deadline | Dates |
| --- | --- | --- |
| Oxford Machine Learning Summer School (OxML): MLx Health & Bio 2025 - Oxford, UK | Aug 02 | Aug 02 - Aug 05 |
| Oxford Machine Learning Summer School (OxML): MLx Representation Learning & GenAI 2025 - Oxford, UK | Aug 07 | Aug 07 - Aug 10 |
| 4th European Summer School on Quantum AI 2025 - Lignano Sabbiadoro, Italy | Aug 01 | Sep 01 - Sep 05 |

For the complete list, please visit our website.

What’s happening in AI?

Artificial Neural Networks were inspired by the brain, but they behave quite differently. Biological neurons fire in response to dynamic electric signals, making the brain a constantly evolving system — unlike today’s mostly static AI models.

What if our models could learn continuously, instead of relying on periodic updates? Fine-tuning is costly and complex, but several efforts are underway to build systems that more closely mimic how the brain actually learns.

Spiking Neural Networks

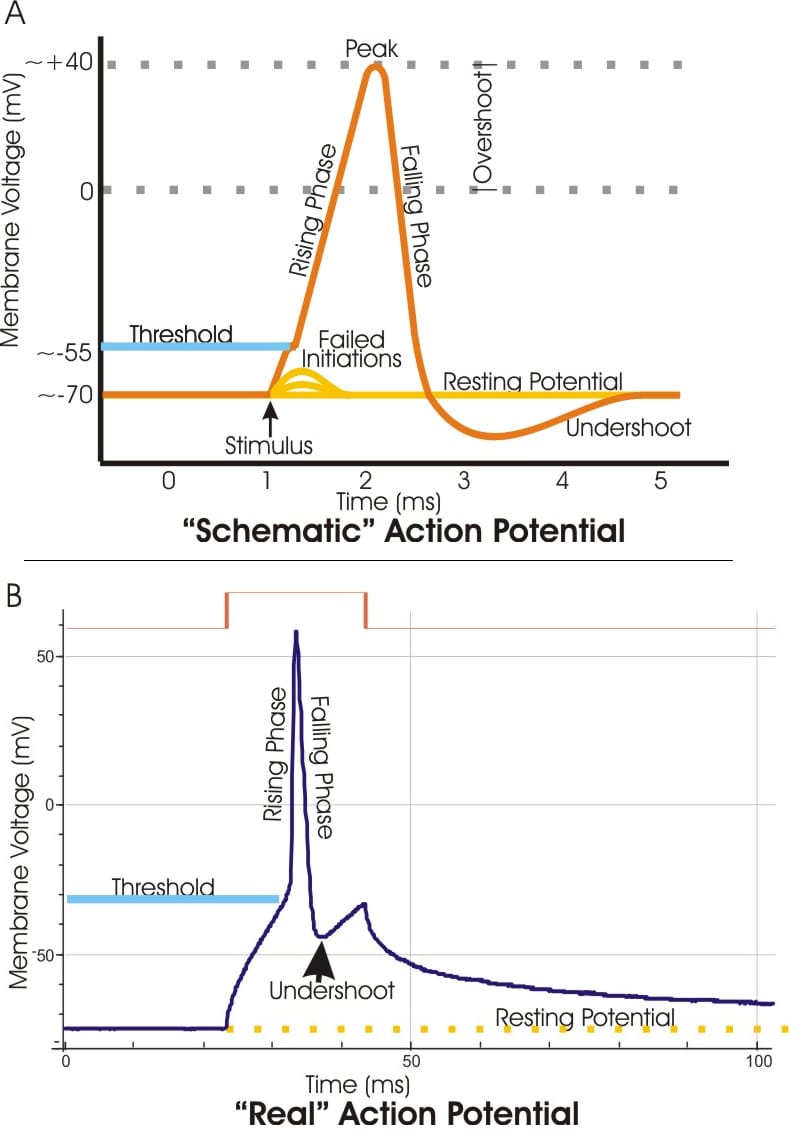

Spiking Neural Networks (SNNs) have been around for a while; one of the earlier formulations was Wolfgang Maass's in 1997. Like biological neurons, the neurons in an SNN fire only when their potential reaches a certain threshold, which depends on several factors, including time and the potential received from upstream neurons. This means that, in principle, the network can keep learning as it runs (memorising patterns, solving mazes, and so on) without a separate training phase for each task.

Example of how a biological neuron reaches its action potential. Artwork by Synaptidude at en.wikipedia, from en:Image:Action potential vert.png (modified version of older Image:Action potential reloaded.jpg), CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=1090445
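To make the fire-at-threshold behaviour concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, one common simplified spiking neuron model. It is an illustration only, not the formulation from any specific paper; the function name `simulate_lif` and the parameter values (`threshold`, `leak`, `v_reset`) are assumptions chosen for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire (LIF) neuron.

    Returns (spikes, potentials): a 0/1 spike flag and the membrane
    potential (after any reset) for every time step.
    All parameter values are illustrative assumptions.
    """
    v = v_reset
    spikes, potentials = [], []
    for current in input_current:
        # Part of the stored potential leaks away, then new input is added.
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = v_reset  # the neuron fires and its potential resets
        else:
            spikes.append(0)
        potentials.append(v)
    return spikes, potentials


# A weak constant input: potential must build up over several steps
# before the neuron fires, so spikes are sparse in time.
spikes, potentials = simulate_lif([0.3] * 10)
print(spikes)
```

The output depends not only on the current input but also on how much potential has accumulated from earlier steps, which is the time dependence described above.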

There were, however, some challenges. Backpropagation could not be applied directly, since a spike is a step function: its derivative is zero almost everywhere and undefined at the threshold. Additionally, because only a sparse subset of neurons fires at any time, learning was not very stable, and building stable large-scale models was difficult.

Over the years, many of these issues have been addressed. Without going into too much detail, we now have mechanisms for backpropagation in SNNs, such as surrogate gradients, as well as techniques for transferring learned weights from an ANN to an SNN. There have also been considerable advances in neuromorphic chips, hardware built specifically for SNNs, which can be considerably more power-efficient than GPUs.
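As a rough illustration of the surrogate-gradient idea: the forward pass keeps the hard step function, while the backward pass substitutes the derivative of a smooth function. The sketch below uses a "fast sigmoid" derivative, which is one common choice among several. The single-weight setup, the function names, and all parameter values are hypothetical and chosen only to keep the example small.

```python
def spike_forward(v, threshold=1.0):
    """Forward pass: hard step function (1.0 if the neuron fires, else 0.0)."""
    return 1.0 if v >= threshold else 0.0


def spike_surrogate_grad(v, threshold=1.0, slope=5.0):
    """Backward pass: derivative of a 'fast sigmoid', used in place of the
    step function's true derivative (which is zero almost everywhere).
    The slope value is an illustrative assumption."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


def train_to_spike(w, x, target=1.0, lr=1.0, max_steps=100):
    """Adjust a single weight w by gradient descent until the output
    spike matches the target (toy setup, assumed for illustration)."""
    for _ in range(max_steps):
        v = w * x                      # potential from one weighted input
        error = spike_forward(v) - target
        if error == 0.0:
            break
        # Chain rule for loss = error**2, with the surrogate standing in
        # for d(spike)/d(v).
        grad_w = 2.0 * error * spike_surrogate_grad(v) * x
        w -= lr * grad_w
    return w


w_trained = train_to_spike(w=0.2, x=1.0)
print(spike_forward(w_trained * 1.0))
```

With the true derivative of the step function, `grad_w` would be zero at every step and the weight would never move; the surrogate gives the optimiser a usable signal that grows as the potential approaches the threshold.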

In 2023, a research team from UC Santa Cruz built a full-fledged language model using SNNs, called SpikeGPT. They drew on ideas from more recent transformer optimisations such as RWKV, and implemented them with SNNs. While it can't yet compete with the LLMs we use on a daily basis, there is one important fact to highlight: it is reported to consume 22 times less power than a transformer network of a similar size.

This is a massive feat, especially considering the environmental concerns posed by large-scale use of LLMs. It also opens up the possibility of running large-scale models on low-powered systems such as mobile phones.

Continuous Thought Machines

Just this year, Sakana AI released a new neural architecture called the Continuous Thought Machine (CTM). Once again inspired more directly by how the human brain works, a CTM essentially maintains one single latent space and reprocesses it over multiple time steps, similar to how SNNs function, which maintains plasticity. Their project page also contains a live demonstration of a CTM solving mazes.

Let’s get a quick refresher on how Recurrent Neural Networks (RNNs) function. Recurrent networks employ some mechanism to retain past information from a sequence. There were many architectures (LSTMs, GRUs, etc.), but the core requirement was always to maintain past information while continuing to process incoming data. Eventually, they were superseded by transformer networks, which became the de facto standard owing to their generalisability across both sequential and non-sequential data.
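The idea of retaining past information can be sketched with the simplest possible recurrent cell: a single hidden value that is updated from the new input and its own previous value. This is a toy scalar version, not an LSTM or GRU; the function name `rnn_scan` and the weights `w_in` and `w_rec` are assumptions picked for illustration.

```python
import math


def rnn_scan(inputs, w_in=0.5, w_rec=0.8, h0=0.0):
    """Run a one-unit recurrent cell over a sequence.

    h_t = tanh(w_in * x_t + w_rec * h_{t-1})
    Returns the hidden state after every step.
    """
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


# A single impulse followed by silence: the hidden state keeps a fading
# trace of the first input even though later inputs are zero.
states = rnn_scan([1.0, 0.0, 0.0, 0.0])
print([round(s, 3) for s in states])
```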

CTMs take the idea of recurrence and combine it with another factor: time. Within the CTM there are several Neuron-Level Models (NLMs), each of which operates on clock cycles, much like the brain or a CPU. In each cycle, an NLM processes its incoming data along with a history of its past data, and the resulting activity is combined in a novel fashion that their technical report calls Neural Synchronisation. Through this combination of recurrence and time-based dynamics, the overall model effectively becomes capable of ‘thinking’.
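A heavily simplified toy version of these ingredients is sketched below: a loop over internal clock cycles, per-neuron models that each read their own input history, and a pairwise synchronisation measure over the neurons' activity across cycles. This is not Sakana AI's implementation. The function `run_toy_ctm`, the random weights, the `tanh` neuron model, and the inner-product synchronisation measure are all assumptions made to keep the sketch short.

```python
import math
import random


def run_toy_ctm(x, num_neurons=4, history_len=3, ticks=6, seed=0):
    """Toy loop: per-neuron history models plus pairwise synchronisation.

    Every weight is random and untrained; all names are hypothetical.
    """
    rng = random.Random(seed)
    n = num_neurons
    # Each neuron has private weights over its own input history.
    private_w = [[rng.uniform(-1, 1) for _ in range(history_len)]
                 for _ in range(n)]
    # Weights mixing the external input and the other neurons' outputs.
    in_w = [rng.uniform(-1, 1) for _ in range(n)]
    mix_w = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]

    pre_hist = [[0.0] * history_len for _ in range(n)]  # recent inputs
    post = [0.0] * n                                    # latest outputs
    post_hist = [[] for _ in range(n)]                  # outputs per tick

    for _ in range(ticks):
        for i in range(n):
            # Incoming signal: external input plus last tick's outputs.
            pre = in_w[i] * x + sum(mix_w[i][j] * post[j] for j in range(n))
            pre_hist[i] = pre_hist[i][1:] + [pre]
        # Each neuron-level model maps its own history to a new output.
        post = [math.tanh(sum(w * h for w, h in zip(private_w[i], pre_hist[i])))
                for i in range(n)]
        for i in range(n):
            post_hist[i].append(post[i])

    # Synchronisation: inner product of two neurons' output histories.
    sync = [[sum(a * b for a, b in zip(post_hist[i], post_hist[j]))
             for j in range(n)] for i in range(n)]
    return post_hist, sync


post_hist, sync = run_toy_ctm(0.5)
print(len(post_hist), len(sync))
```

The point of the sketch is the structure: the same input is reprocessed over several internal ticks, and the quantity read out at the end describes how neurons behaved together over time rather than a single snapshot of activations.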

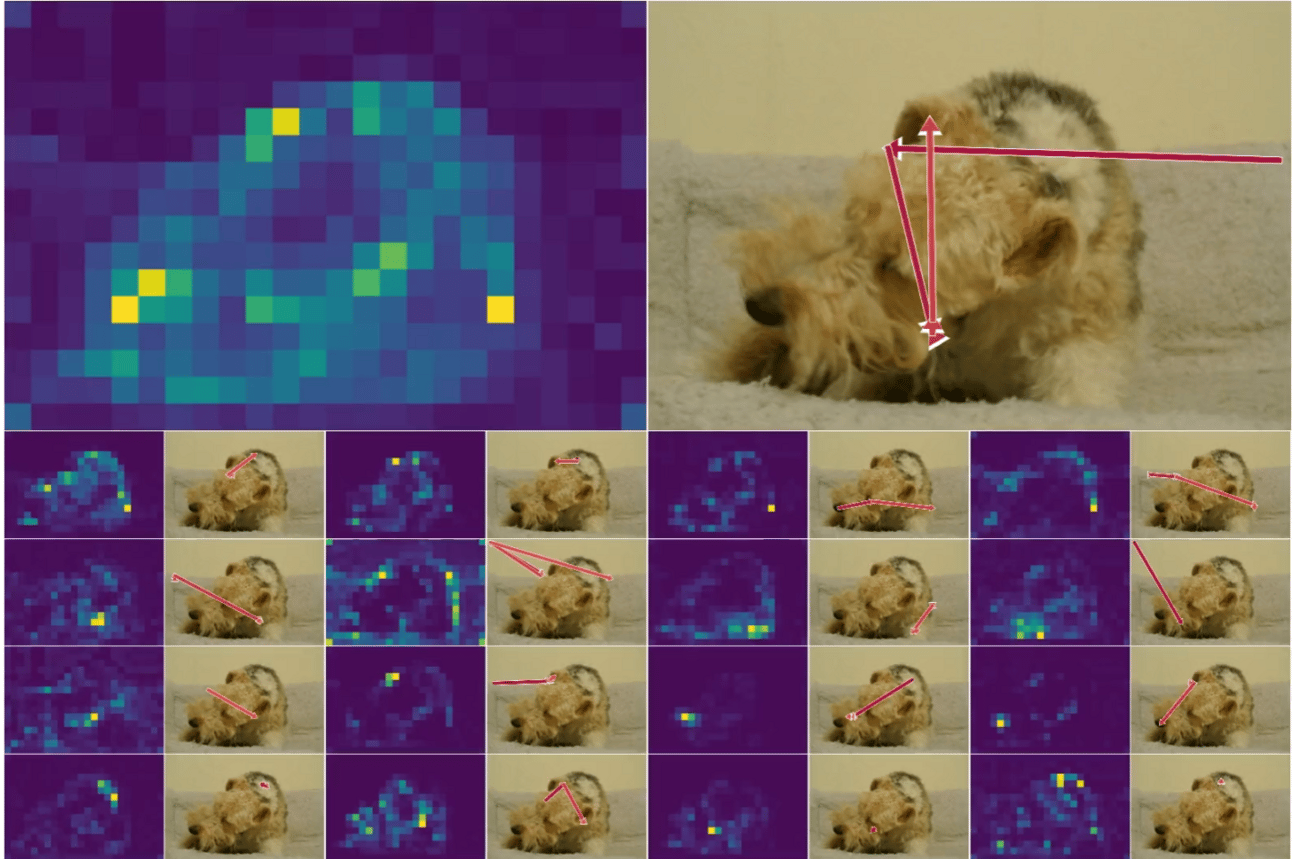

Example of a CTM applying attention over multiple steps to classify an animal. Image source: https://pub.sakana.ai/ctm/

In the experimental section, they also show how the CTM, when classifying images of animals, attends to several different features, such as the eyes and facial structure (studied via attention mapping), before concluding which animal it is, much as a human would.

These were just a few examples of the brain-inspired architectures we came across, but there are many more being explored at the moment.

Are there any architectures you’d like us to explore in our next edition? Do let us know!

Awesome Machine Learning Summer Schools is a non-profit organisation that keeps you updated on ML Summer Schools and their deadlines. Simple as that.

Have any questions or doubts? Drop us an email! We would be more than happy to talk to you.
